Projects

A project is analogous to an AI feature in your application. Some customers create separate projects for development and production to keep those workflows separate. A project contains all of the experiments, logs, datasets, and playgrounds for the feature.

For example, a project might contain:

- An experiment that tests the performance of a new version of a chatbot

- A dataset of customer support conversations

- A prompt that guides the chatbot's responses

- A tool that helps the chatbot answer customer questions

- A scorer that evaluates the chatbot's responses

- Logs that capture the chatbot's interactions with customers

Project configuration

Projects also hold configuration settings that are shared by everything in the project.

Tags

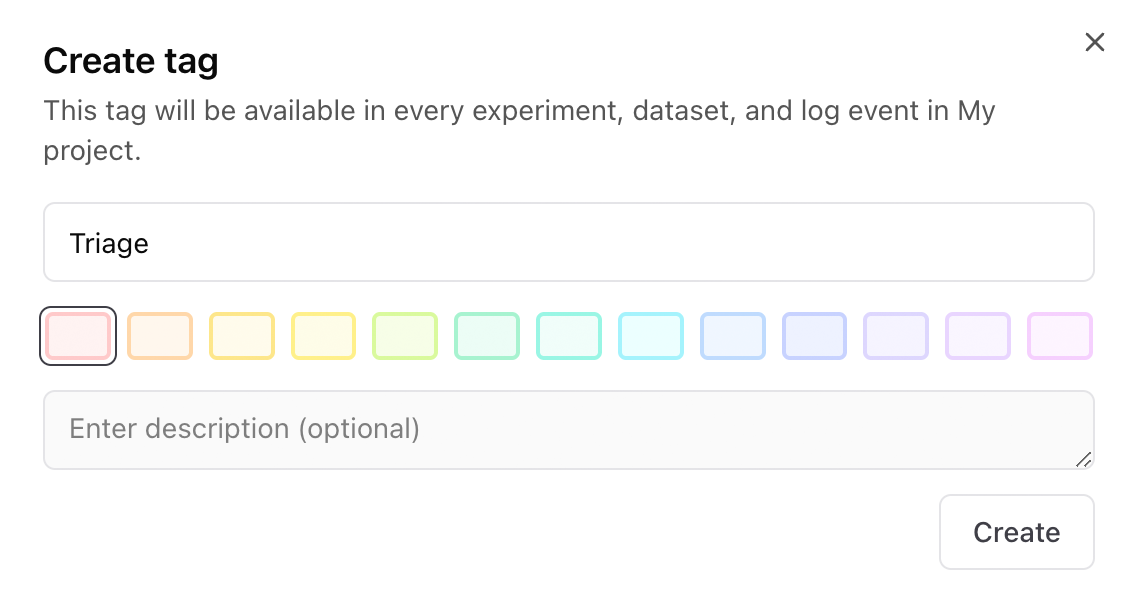

Braintrust supports tags that you can use throughout your project to curate logs, datasets, and even experiments. You can filter by tag in the UI to track different kinds of data across your application and see how they change over time. To create a tag, go to the Configuration tab, select Add tag, then enter a tag name, select a color, and optionally add a description.

For more information about using tags to curate logs, see the logging guide.

Human review

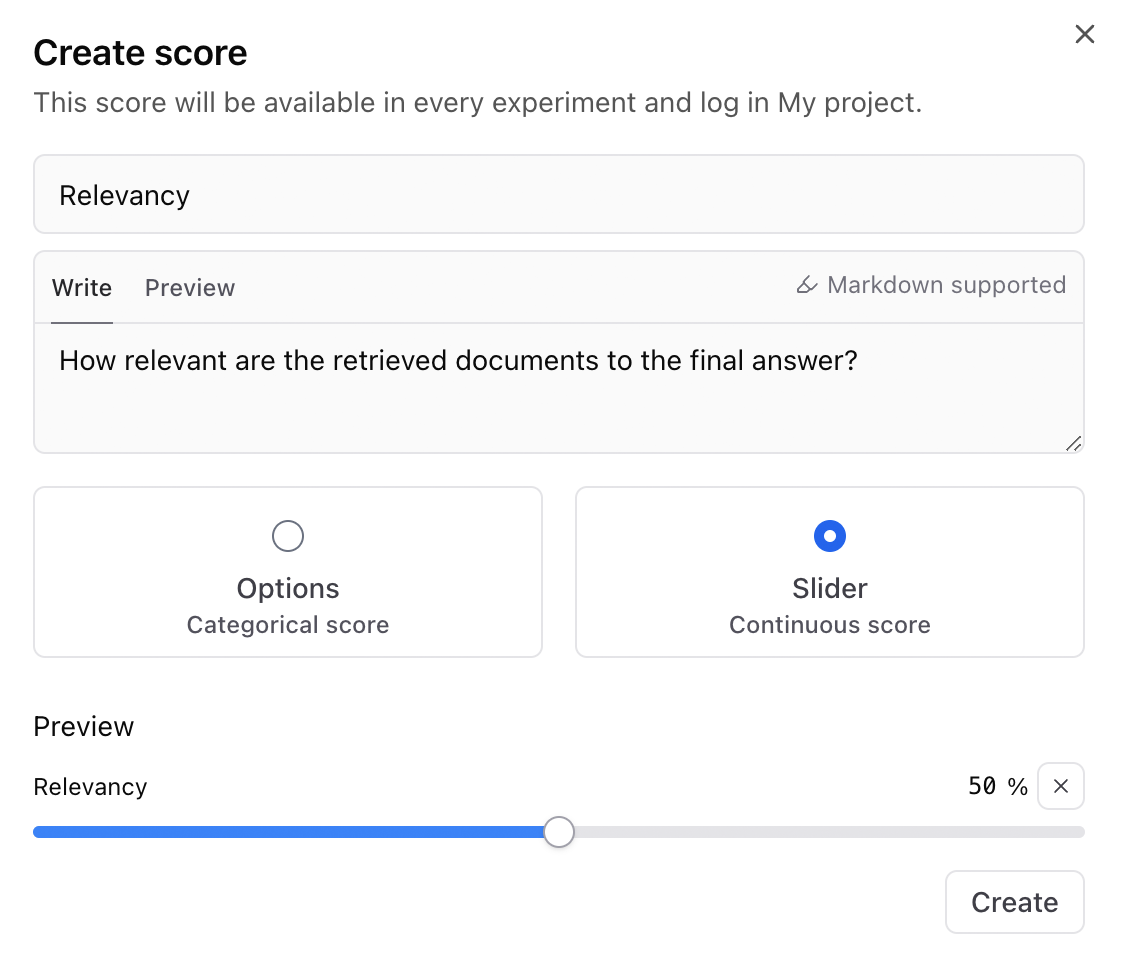

You can define scores and labels for manual human review, either as feedback from your users (through the API) or directly through the UI. Scores you define on the Configuration page will be available in every experiment and log in your project.

To create a new score, select Add human review score and enter a name and score type. You can add multiple options, and choose whether to write to the expected field instead of the score and whether to allow multiple choice.

To learn more about human review, check out the full guide.

Aggregate scores

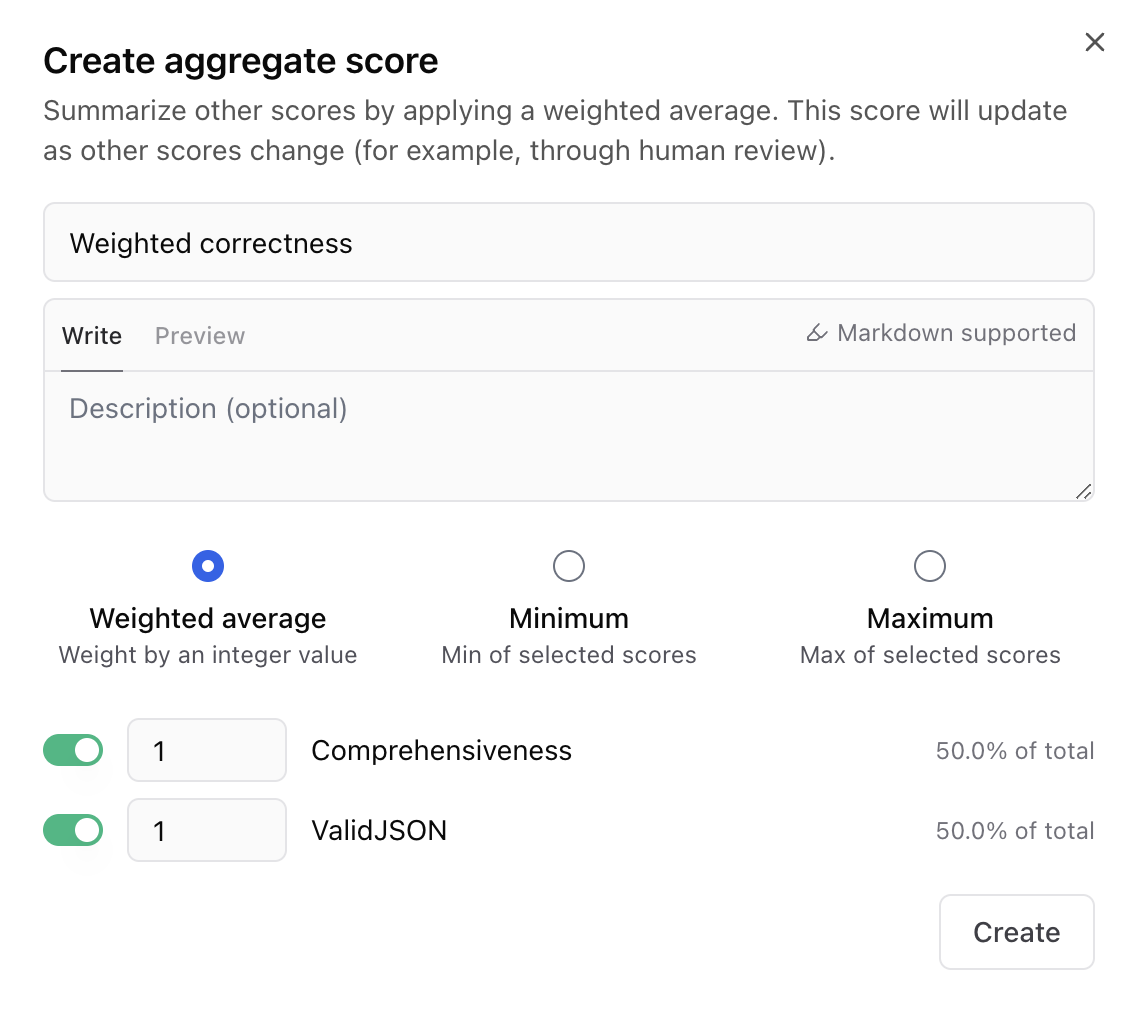

Aggregate scores are formulas that combine multiple scores into a single value. This can be useful for creating a single score that represents the overall experiment.

To create an aggregate score, select Add aggregate score and enter a name, formula, and description. Braintrust currently supports three types of aggregate scores:

- Weighted average - A weighted average of selected scores.

- Minimum - The minimum value among the selected scores.

- Maximum - The maximum value among the selected scores.
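As a rough illustration of the three aggregate types listed above, the following Python sketch computes each one over a single row of scores. It is not Braintrust's implementation: the function names, the score names, the weights, and the choice to normalize by the sum of the weights are all assumptions made for the example.

```python
from typing import Dict, List, Optional


def weighted_average(scores: Dict[str, float], weights: Dict[str, float]) -> Optional[float]:
    """Weighted mean of the scores named in `weights`; None if none are present.

    Assumption: weights are normalized by their sum over the scores present.
    """
    present = [name for name in weights if name in scores]
    total_weight = sum(weights[name] for name in present)
    if total_weight == 0:
        return None
    return sum(scores[name] * weights[name] for name in present) / total_weight


def minimum(scores: Dict[str, float], selected: List[str]) -> Optional[float]:
    """Smallest value among the selected scores; None if none are present."""
    values = [scores[name] for name in selected if name in scores]
    return min(values) if values else None


def maximum(scores: Dict[str, float], selected: List[str]) -> Optional[float]:
    """Largest value among the selected scores; None if none are present."""
    values = [scores[name] for name in selected if name in scores]
    return max(values) if values else None


# Hypothetical scores for one test case, and hypothetical weights.
row_scores = {"Factuality": 0.8, "Conciseness": 0.5, "Safety": 1.0}
weights = {"Factuality": 2.0, "Conciseness": 1.0, "Safety": 1.0}

overall = weighted_average(row_scores, weights)  # (0.8*2 + 0.5*1 + 1.0*1) / 4
lowest = minimum(row_scores, list(weights))
highest = maximum(row_scores, list(weights))
```

A minimum is useful when any single failing score should drag the aggregate down, while a weighted average lets one score count for more than the others.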

To learn more about aggregate scores, check out the experiments guide.

Online scoring

Braintrust supports server-side online evaluations that run automatically and asynchronously as you upload logs. To create an online evaluation, select Add rule, then enter a rule name and description and choose the scorers and sampling rate to use. You can choose from built-in scorers and from custom scorers in this project or elsewhere in your organization. You can also decide whether the rule applies to the root span or to other spans in your traces.
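To make the sampling rate concrete, here is a minimal Python sketch of the general idea: each incoming log is scored with a fixed probability. This is only a conceptual model, not Braintrust's server-side logic. The `should_score` function is hypothetical, and the sketch assumes the rate is expressed as a fraction between 0 and 1.

```python
import random


def should_score(sampling_rate: float, rng: random.Random) -> bool:
    """Decide whether one log is scored, given a rate in [0, 1] (assumed convention)."""
    if not 0.0 <= sampling_rate <= 1.0:
        raise ValueError("sampling_rate must be between 0 and 1")
    # rng.random() is in [0.0, 1.0), so a rate of 1.0 always scores and 0.0 never does.
    return rng.random() < sampling_rate


# Simulate 10,000 logs at a 25% sampling rate with a seeded generator.
rng = random.Random(0)
scored = sum(should_score(0.25, rng) for _ in range(10_000))
print(f"scored {scored} of 10000 logs")
```

A lower rate reduces the cost of running scorers on high-volume logs, at the price of noisier score estimates.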

For more information about online evaluations, check out the logging guide.

Span iframes

You can configure span iframes from your project settings. For more information, check out the extend traces guide.

Comparison key

When comparing multiple experiments, Braintrust uses the comparison key to match test cases across them. The key is an expression that defaults to "input," and you can change it in your project's Configuration tab.
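One way to picture the comparison key is as a function that is evaluated on every test case, with cases from two experiments paired when their keys are equal. The Python sketch below illustrates that reading. The `match_rows` helper and the row layout are hypothetical, and this is not how Braintrust implements comparisons.

```python
import json
from typing import Any, Callable, Dict, List, Tuple

Row = Dict[str, Any]


def match_rows(
    base: List[Row],
    comparison: List[Row],
    key: Callable[[Row], Any] = lambda row: row["input"],
) -> List[Tuple[Row, Row]]:
    """Pair rows from two experiments whose comparison keys are equal."""

    def normalize(value: Any) -> str:
        # Serialize so that dict or list keys can be used for lookup.
        return json.dumps(value, sort_keys=True)

    index = {normalize(key(row)): row for row in base}
    pairs = []
    for row in comparison:
        k = normalize(key(row))
        if k in index:
            pairs.append((index[k], row))
    return pairs


# Hypothetical rows from two experiments.
base = [{"input": "a", "score": 0.5}, {"input": "b", "score": 0.9}]
comparison = [{"input": "b", "score": 0.7}, {"input": "c", "score": 0.1}]

# Default key: match on the whole input. Only "b" appears in both experiments.
pairs = match_rows(base, comparison)
```

Passing a different `key` function, for example one that reads a single field of a structured input, corresponds to changing the comparison key.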

For more information about the comparison key, check out the evaluation guide.

Rename project

You can rename your project at any time in the Configuration tab.