Eval playgrounds

Playgrounds are a powerful workspace for rapidly iterating on AI engineering primitives. Tune prompts, models, scorers and datasets in an editor-like interface, and run full evaluations in real-time, side by side.

Use playgrounds to build and test hypotheses and evaluation configurations in a flexible environment. Playgrounds leverage the same underlying Eval structure as experiments, with support for running thousands of dataset rows directly in the browser. Collaborating with teammates is also simple with a shared URL.

Playgrounds are designed for quick prototyping of ideas. When a playground is run, its previous generations are overwritten. You can create experiments from playgrounds when you need to capture an immutable snapshot of your evaluations for long-term reference or point-in-time comparison.

You can try the playground without signing up. Any work you do in a demo playground will be saved if you make an account.

Creating a playground

A playground includes one or more evaluation tasks, one or more scorers, and optionally, a dataset.

You can create a playground by navigating to Evaluations > Playgrounds, or by selecting Create playground with prompt at the bottom of a prompt dialog.

Tasks

Tasks define LLM instructions. There are four types of tasks:

- Prompts: AI model, prompt messages, parameters, and tools.
- Agents: A chain of prompts.
- Remote evals: Prompts and scorers from external sources.
- Scorers: Prompts or heuristics used to evaluate the output of LLMs. Running scorers as tasks is useful to validate and iterate on them.

Scorers-as-tasks are different from the scorers used to evaluate tasks: a scorer-as-task is itself the thing being run and evaluated. You can even score your scorers-as-tasks in the playground.

An empty playground will prompt you to create a base task and, optionally, comparison tasks. The base task is used as the source when diffing output traces.

When you select Run (or press Cmd/Ctrl+Enter), all tasks run in parallel and the results stream into the grid below. You can also switch to a list or summary layout.

AI providers must be configured before playgrounds can be run.

For multimodal workflows, supported attachments are previewed in the inline embedded view.

Scorers

Scorers quantify the quality of evaluation outputs using an LLM judge or code. You can use built-in autoevals for common evaluation scenarios to help you get started quickly, or write custom scorers tailored to your use case.

To add a scorer, select + Scorer and choose from the list or create a custom scorer.
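As a rough illustration of a custom code scorer, the sketch below is a heuristic that returns 1 when the output matches the expected value after normalizing case and whitespace, and 0 otherwise. The function name exact_match_scorer and its argument list are assumptions modeled on the input, output, expected, and metadata fields that scorers receive; the exact signature the playground's scorer editor expects may differ.

```python
from typing import Any, Optional


def normalize(value: Any) -> str:
    # Collapse runs of whitespace and ignore case before comparing.
    return " ".join(str(value).split()).lower()


def exact_match_scorer(
    input: Any,
    output: Any,
    expected: Any,
    metadata: Optional[dict] = None,
) -> float:
    """Hypothetical heuristic scorer returning a score between 0 and 1."""
    if expected is None:
        # Nothing to compare against; this sketch treats that as a miss.
        return 0.0
    return 1.0 if normalize(output) == normalize(expected) else 0.0


print(exact_match_scorer("Capital of France?", "  Paris ", "paris"))  # 1.0
```

An LLM-judge scorer would replace the string comparison with a model call, but the contract of "evaluation fields in, score out" stays the same.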

Datasets

Datasets provide structured inputs, expected values, and metadata for evaluations.

A playground can be run without a dataset to view a single set of task outputs, or with a dataset to view a matrix of outputs for many inputs.

Datasets can be linked to a playground by selecting existing library datasets, or creating/importing a new one.

Once you link a dataset, you will see a new row in the grid for each record in the dataset. You can reference the data from each record in your prompt using the input, expected, and metadata variables. The playground uses mustache syntax for templating, so these variables are written as {{input}}, {{expected}}, and {{metadata}}.
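To make the lookup behavior concrete, here is a minimal Python sketch of mustache-style variable substitution with dotted paths. It is not the playground's renderer: it handles only plain {{variable}} tags, skips mustache's HTML escaping, and serializing non-string values as JSON is an assumption of this sketch.

```python
import json
import re
from typing import Any

# Matches {{ name }} or {{ name.path.to.field }}; sections and partials are not handled.
TAG = re.compile(r"\{\{\s*([\w.]+)\s*\}\}")


def lookup(context: dict[str, Any], path: str) -> Any:
    """Resolve a dotted path such as 'input.formula' against the row."""
    value: Any = context
    for key in path.split("."):
        if isinstance(value, dict) and key in value:
            value = value[key]
        else:
            return ""  # mustache renders missing values as empty strings
    return value


def render(template: str, context: dict[str, Any]) -> str:
    def substitute(match: re.Match) -> str:
        value = lookup(context, match.group(1))
        # Assumption: non-string values (objects, numbers) are rendered as JSON.
        return value if isinstance(value, str) else json.dumps(value)

    return TAG.sub(substitute, template)


row = {
    "input": {"formula": "2x + 3 = 11"},
    "expected": "x = 4",
    "metadata": {"difficulty": "easy"},
}
print(render("Solve {{input.formula}} ({{metadata.difficulty}})", row))
# Solve 2x + 3 = 11 (easy)
```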

Each value can be arbitrarily complex JSON, and you can reference nested fields with dot notation, for example, {{input.formula}}. If you want to preserve double curly brackets {{ and }} as plain text in your prompts, you can change the delimiter tags to any custom string of your choosing. For example, to change the tags to <% and %>, insert {{=<% %>=}} into the message; all text after it in that message block will then use these delimiters.
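The following is a simplified Python sketch of how a set-delimiter tag changes parsing from that point on. It supports only variable tags and set-delimiter tags (no sections, partials, escaping, or standalone-line handling), and the helper names are hypothetical rather than part of any playground API.

```python
import json
from typing import Any


def lookup(context: dict[str, Any], path: str) -> Any:
    # Walk a dotted path such as "input.question"; missing keys render as "".
    value: Any = context
    for key in path.split("."):
        if not (isinstance(value, dict) and key in value):
            return ""
        value = value[key]
    return value


def render_with_delimiters(template: str, context: dict[str, Any]) -> str:
    open_tag, close_tag = "{{", "}}"  # mustache defaults
    out: list[str] = []
    pos = 0
    while True:
        start = template.find(open_tag, pos)
        end = -1 if start == -1 else template.find(close_tag, start + len(open_tag))
        if start == -1 or end == -1:
            out.append(template[pos:])  # no more complete tags: copy the rest
            break
        out.append(template[pos:start])
        body = template[start + len(open_tag):end].strip()
        next_pos = end + len(close_tag)  # computed before the tags can change
        if body.startswith("=") and body.endswith("="):
            # Set-delimiter tag: "=<% %>=" switches the tags to "<%" and "%>".
            open_tag, close_tag = body[1:-1].split()
        else:
            value = lookup(context, body)
            out.append(value if isinstance(value, str) else json.dumps(value))
        pos = next_pos
    return "".join(out)


row = {"input": {"question": "What is 2 + 2?"}}
template = 'Answer {{=<% %>=}}<% input.question %> as {{"answer": <number>}}'
print(render_with_delimiters(template, row))
# Answer What is 2 + 2? as {{"answer": <number>}}
```

After the switch, {{ and }} are ordinary text, which is what lets a prompt show literal JSON-style braces to the model.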

Editing a dataset in a playground modifies the original dataset.

For scorers-as-tasks

When evaluating scorers in the playground, make sure your dataset's input schema follows the scorer convention. As when a scorer is used on a prompt or agent, the input to the scorer should have the shape { input, expected, metadata, output }.

Unlike with other task types, these reserved keys are hoisted into the global scope, so you can use your saved scorers in the playground and reference their variables (for example, {{output}} rather than {{input.output}}) without any changes.

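For example, to tune a scorer whose prompt references {{output}} and {{expected}}, each dataset row's input should be an object containing output and expected keys, plus input and metadata if the prompt uses them.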
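The hoisting behavior can be pictured with the Python sketch below. The row layout follows the { input, expected, metadata, output } shape described above; the helper hoist_scorer_args and the top-level expected verdict are hypothetical illustrations, not the playground's implementation.

```python
from typing import Any

# Reserved scorer arguments, per the { input, expected, metadata, output } convention.
SCORER_KEYS = ("input", "expected", "metadata", "output")


def hoist_scorer_args(row: dict[str, Any]) -> dict[str, Any]:
    """Expose the reserved keys inside row["input"] as top-level template variables."""
    args = row.get("input")
    if not isinstance(args, dict):
        raise ValueError("For scorers-as-tasks, a row's input should be an object")
    return {key: args[key] for key in SCORER_KEYS if key in args}


# Hypothetical row for tuning a scorer whose prompt uses {{output}} and {{expected}}.
row = {
    "input": {
        "input": "Tell me a joke about chickens.",
        "output": "Why did the chicken cross the road? To get to the other side!",
        "expected": "A short, family-friendly joke about chickens.",
    },
    # Assumption: the row's own expected value holds the verdict you want the scorer to give.
    "expected": "Y",
}

variables = hoist_scorer_args(row)
print(sorted(variables))  # ['expected', 'input', 'output']
```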

Running a playground

To run a playground, select the Run button at the top of the playground to run all tasks and all dataset rows. You can also run a single task individually, or run a single dataset row.
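Conceptually, a run fills a tasks-by-rows grid, and running a single task or a single row fills just one column or one row of it; without a dataset, each task produces a single output. The sequential Python sketch below is only a mental model (the playground itself runs tasks in parallel), and run_grid with its filter parameters is hypothetical.

```python
from typing import Any, Callable, Optional

Row = dict[str, Any]
Task = Callable[[Row], str]


def run_grid(
    tasks: dict[str, Task],
    rows: Optional[list[Row]] = None,
    only_task: Optional[str] = None,
    only_row: Optional[int] = None,
) -> dict[tuple[str, int], str]:
    """Fill a tasks-by-rows grid of outputs, optionally limited to one task or row."""
    # Without a dataset, each task runs once against an empty row.
    rows = rows or [{}]
    results: dict[tuple[str, int], str] = {}
    for name, task in tasks.items():
        if only_task is not None and name != only_task:
            continue
        for index, row in enumerate(rows):
            if only_row is not None and index != only_row:
                continue
            results[(name, index)] = task(row)
    return results


tasks = {
    "shout": lambda row: str(row.get("input", "")).upper(),
    "echo": lambda row: str(row.get("input", "")),
}
rows = [{"input": "hello"}, {"input": "world"}]

print(len(run_grid(tasks, rows)))                # 4: every task on every row
print(run_grid(tasks, rows, only_task="shout"))  # one task, all rows
print(run_grid(tasks, rows, only_row=1))         # all tasks, one row
```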

Viewing traces

Select a row in the results table to compare evaluation traces side-by-side. This allows you to identify differences in outputs, scores, metrics, and input data.

From this view, you can also run a single row by selecting Run row.

Diffing

Diffing allows you to visually compare variations across models, prompts, or agents to quickly understand differences in outputs.

To turn on diff mode, select the diff toggle.
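The diff view is visual, but the underlying idea of comparing a comparison task's output against the base task's output can be sketched with Python's standard difflib module. This line-level unified diff is a stand-in chosen for illustration, not how Braintrust computes its diffs, and the task name variant-b is made up.

```python
import difflib


def diff_against_base(base_output: str, other_output: str, other_name: str = "comparison") -> list[str]:
    """Line-level unified diff of another task's output against the base task's output."""
    return list(
        difflib.unified_diff(
            base_output.splitlines(),
            other_output.splitlines(),
            fromfile="base",
            tofile=other_name,
            lineterm="",  # splitlines() already removed the newline characters
        )
    )


base = "The answer is 4.\nConfidence: high"
other = "The answer is 4.\nConfidence: medium"
print("\n".join(diff_against_base(base, other, "variant-b")))
```

Identical outputs produce an empty diff, which mirrors how an unchanged cell shows no highlighting.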

Creating experiment snapshots

Experiments formalize evaluation results for comparison and historical reference. While playgrounds are better for fast, iterative exploration, experiments are immutable, point-in-time evaluation snapshots ideal for detailed analysis and reporting.

To create an experiment from a playground, select + Experiment. Each playground task will map to its own experiment.

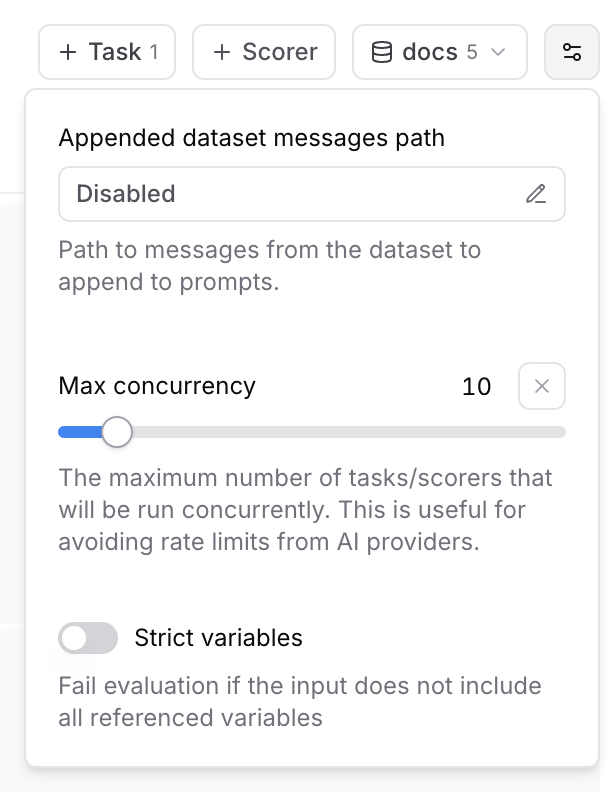

Advanced options

Appended dataset messages

You may sometimes have additional messages in a dataset that you want to append to a prompt. This option lets you specify a path to a messages array in the dataset. For example, if input is specified as the appended messages path and a dataset row's input is an array of chat messages, all prompts in the playground will run with those messages appended.

To append messages from a dataset to your prompts, open the advanced settings menu next to your dataset selection and enter the path to the messages you want to append.
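A minimal Python sketch of the appended-messages behavior, assuming the path is a dot-separated key path into the row and that the dataset messages go after the prompt's own messages. build_messages and resolve_path are hypothetical helpers, and the support conversation is invented sample data.

```python
from typing import Any, Optional

Message = dict[str, str]


def resolve_path(row: dict[str, Any], path: str) -> Any:
    # Follow a dot-separated path such as "input" or "input.history".
    value: Any = row
    for key in path.split("."):
        value = value[key]
    return value


def build_messages(
    prompt_messages: list[Message],
    row: dict[str, Any],
    appended_path: Optional[str] = None,
) -> list[Message]:
    messages = list(prompt_messages)  # copy so the saved prompt is left untouched
    if appended_path:
        extra = resolve_path(row, appended_path)
        if not isinstance(extra, list):
            raise TypeError(f"Expected a messages array at {appended_path!r}")
        messages.extend(extra)
    return messages


prompt = [{"role": "system", "content": "You are a concise support assistant."}]
row = {
    "input": [
        {"role": "user", "content": "My order hasn't arrived."},
        {"role": "assistant", "content": "Sorry to hear that. What's your order number?"},
        {"role": "user", "content": "It's 1234."},
    ]
}
for message in build_messages(prompt, row, appended_path="input"):
    print(f'{message["role"]}: {message["content"]}')
```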

Max concurrency

The maximum number of tasks and scorers that run concurrently in the playground. Lowering it helps you avoid rate-limit errors (HTTP 429 Too Many Requests) from AI providers.
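A concurrency cap behaves like a semaphore around provider calls. The asyncio sketch below limits how many simulated calls are in flight at once; fake_call stands in for a real model request, and run_with_limit is a hypothetical helper rather than anything the playground exposes.

```python
import asyncio
from typing import Awaitable, Callable, List, TypeVar

T = TypeVar("T")


async def run_with_limit(jobs: List[Callable[[], Awaitable[T]]], max_concurrency: int) -> List[T]:
    """Run every job, but never more than max_concurrency at the same time."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def guarded(job: Callable[[], Awaitable[T]]) -> T:
        async with semaphore:  # waits here while the limit is reached
            return await job()

    # gather returns results in the same order as the input jobs.
    return list(await asyncio.gather(*(guarded(job) for job in jobs)))


async def demo(max_concurrency: int = 3, n_calls: int = 10):
    in_flight = 0
    peak = 0

    async def fake_call(i: int) -> int:
        # Stand-in for a model request; records how many calls overlap.
        nonlocal in_flight, peak
        in_flight += 1
        peak = max(peak, in_flight)
        await asyncio.sleep(0.01)
        in_flight -= 1
        return i * 2

    jobs = [lambda i=i: fake_call(i) for i in range(n_calls)]
    results = await run_with_limit(jobs, max_concurrency)
    return results, peak


results, peak = asyncio.run(demo())
print(results, "peak in flight:", peak)
```

A lower limit trades total run time for fewer simultaneous requests, which is the lever that keeps a provider from returning 429s.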

Strict variables

When this option is enabled, evaluations will fail if the dataset row does not include all of the variables referenced in prompts.
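A rough Python sketch of a strict-variables check, assuming it compares the {{variable}} tags found in the prompts with the keys present in the dataset row. check_strict and its error message are hypothetical.

```python
import re
from typing import Any, Iterable

# Plain variable tags like {{input.question}}; sections and partials are ignored.
TAG = re.compile(r"\{\{\s*([\w.]+)\s*\}\}")


def has_path(row: dict[str, Any], path: str) -> bool:
    value: Any = row
    for key in path.split("."):
        if not isinstance(value, dict) or key not in value:
            return False
        value = value[key]
    return True


def check_strict(templates: Iterable[str], row: dict[str, Any]) -> None:
    """Raise if any variable referenced in the prompts is absent from the row."""
    referenced = {name for template in templates for name in TAG.findall(template)}
    missing = sorted(name for name in referenced if not has_path(row, name))
    if missing:
        raise ValueError("Dataset row is missing variables: " + ", ".join(missing))


row = {"input": {"question": "What is 2 + 2?"}}
check_strict(["Answer: {{input.question}}"], row)  # passes silently
try:
    check_strict(["{{input.question}} (topic: {{metadata.topic}})"], row)
except ValueError as err:
    print(err)  # Dataset row is missing variables: metadata.topic
```

With the option off, the same missing variable would simply render as an empty string instead of failing the evaluation.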

Collaboration

Playgrounds are designed for collaboration and automatically synchronize in real-time.

To share a playground, copy the URL and send it to your collaborators. Your collaborators must be members of your organization to view the playground. You can invite users from the settings page.

Reasoning

If you are on a hybrid deployment, reasoning support is available starting with v0.0.74.

Reasoning models like OpenAI's o4-mini, Anthropic's Claude 3.7 Sonnet, and Google's Gemini 2.5 Flash generate intermediate reasoning steps before producing a final response. Braintrust provides unified support for these models, so you can work with reasoning outputs no matter which provider you choose.

When you enable reasoning, models generate "thinking tokens" that show their step-by-step reasoning process. This is useful for complex tasks like math problems, logical reasoning, coding, and multi-step analysis.

In playgrounds, you can configure reasoning parameters directly in the model settings.

To enable reasoning in a playground:

- Select a reasoning-capable model, like claude-3-7-sonnet-latest, o4-mini, or publishers/google/models/gemini-2.5-flash-preview-04-17 (Gemini provided by Vertex AI).
- In the model parameters section, configure your reasoning settings:
  - Set reasoning_effort to low, medium, or high, or
  - Enable reasoning_enabled and specify a reasoning_budget.
- Run your prompt to see reasoning in action.
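The two alternatives in the steps above can be expressed as parameter dictionaries. This Python sketch treats them as mutually exclusive and assumes reasoning_budget is a positive token count; reasoning_params is a hypothetical helper, and the playground may accept or validate these settings differently.

```python
from typing import Any, Optional

EFFORT_LEVELS = ("low", "medium", "high")


def reasoning_params(effort: Optional[str] = None, budget: Optional[int] = None) -> dict[str, Any]:
    """Build either an effort-based or a budget-based reasoning configuration."""
    if (effort is None) == (budget is None):
        raise ValueError("Set either an effort level or a reasoning budget, not both or neither")
    if effort is not None:
        if effort not in EFFORT_LEVELS:
            raise ValueError(f"reasoning_effort must be one of {EFFORT_LEVELS}")
        return {"reasoning_effort": effort}
    if budget <= 0:
        # Assumption: the budget is a positive number of reasoning tokens.
        raise ValueError("reasoning_budget must be a positive number of tokens")
    return {"reasoning_enabled": True, "reasoning_budget": budget}


print(reasoning_params(effort="medium"))  # {'reasoning_effort': 'medium'}
print(reasoning_params(budget=2048))      # {'reasoning_enabled': True, 'reasoning_budget': 2048}
```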