Building secure and scalable production apps with OpenAI’s Realtime API

In early October, OpenAI released the Realtime API, designed for building advanced multimodal conversational experiences. This API enables rich AI applications with features like speech-to-speech interaction and simultaneous multimodal output. However, there are three key pain points that need to be solved before you can use the API to build secure and scalable production applications:

- User-facing credentials

- Logging

- Evaluations

At Braintrust, we want building AI applications with the most cutting-edge models to be a simple, wonderful developer experience. Today, we’re excited to announce support for the Realtime API via the Braintrust AI proxy, and solutions to these specific pain points.

Infrastructure challenges

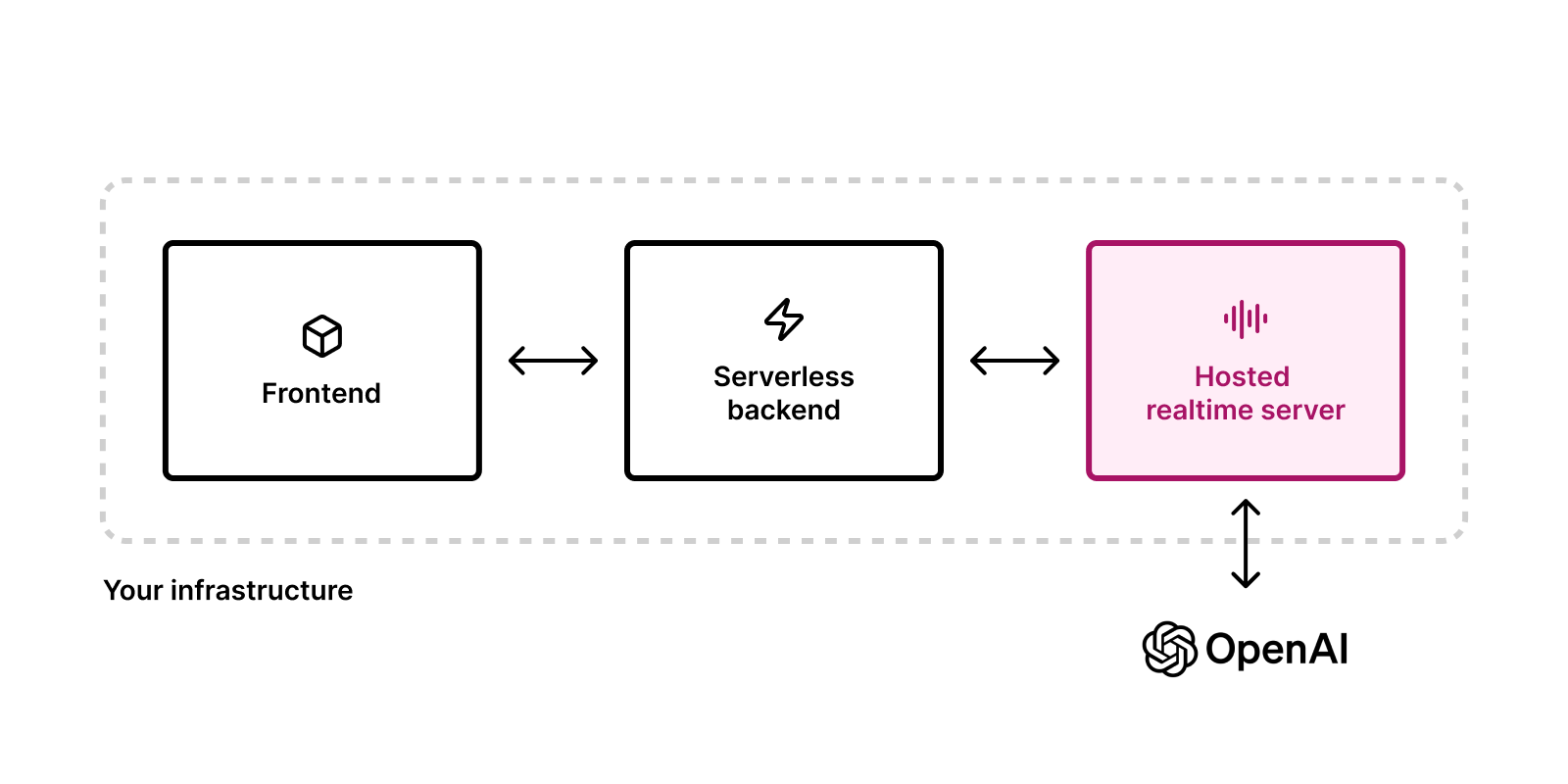

The OpenAI Realtime API is built on WebSockets to enable a responsive user experience. However, if you’re using a serverless backend like Vercel or AWS Lambda, which do not support WebSockets, it’s impossible to connect to the API without hosting a separate server somewhere else.

The API also currently lacks client-side authentication, making it insecure to connect to the API directly from the user’s browser.

The architecture requires developers to solve these problems by setting up a separate, long-running Node.js relay server. The relay server runs the provided relay.js code and holds an OpenAI API key to handle Realtime API connections. Running a separate server complicates your architecture, but the only alternative—storing your API key in the frontend—isn’t secure for production.

Because all Realtime API calls need to pass through the relay, it also has to scale up quickly to handle your app’s traffic without hurting responsiveness. We believe developers would rather focus on the features that make their app unique than on scaling infrastructure.

Using the AI proxy

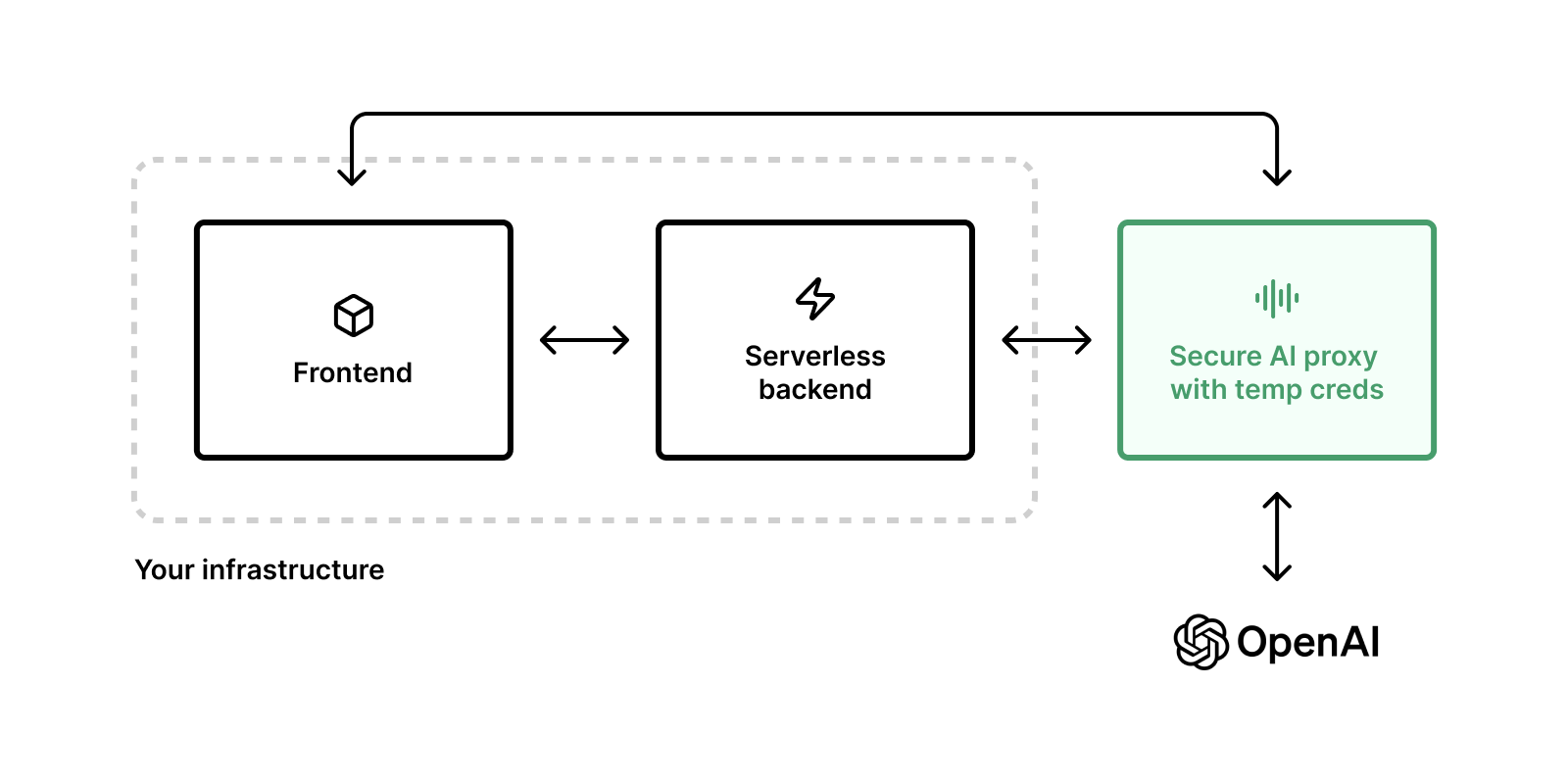

To address this operational complexity, we designed a solution around our existing AI proxy. This way, you won’t need to embed your OpenAI API key directly into your backend, and you can keep using whichever serverless platform you already use to build AI applications.

The AI proxy securely manages your OpenAI API key, issuing temporary credentials to your backend and frontend. The frontend sends any voice data from your app to the proxy, which handles secure communication with OpenAI’s Realtime API. This offloads the infrastructure burden to us, and allows you to focus on building your app.

Configuring your app

To access the Realtime API through the Braintrust proxy, change the proxy URL when instantiating the RealtimeClient to https://braintrustproxy.com/v1/realtime.
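As a minimal sketch of that change, the helper below builds client options that point at the proxy URL given above. The option names (`url`, `apiKey`, `dangerouslyAllowAPIKeyInBrowser`) are assumptions based on OpenAI’s reference client and should be checked against the version you install; `buildRealtimeClientOptions` is a hypothetical helper, not part of any library.

```typescript
// Proxy endpoint for the Realtime API, as given in the text above.
const BRAINTRUST_REALTIME_URL = "https://braintrustproxy.com/v1/realtime";

// Assumed shape of the options accepted by RealtimeClient in OpenAI's
// reference client; verify the names against the package you use.
interface RealtimeClientOptions {
  url: string;
  apiKey: string;
  dangerouslyAllowAPIKeyInBrowser: boolean;
}

// Hypothetical helper: build options that send traffic to the proxy
// instead of connecting to OpenAI directly.
function buildRealtimeClientOptions(credential: string): RealtimeClientOptions {
  if (credential.trim() === "") {
    throw new Error("A credential is required to connect through the proxy");
  }
  return {
    url: BRAINTRUST_REALTIME_URL,
    apiKey: credential,
    dangerouslyAllowAPIKeyInBrowser: true,
  };
}

// Usage (assumes the reference client package is installed):
//   import { RealtimeClient } from "@openai/realtime-api-beta";
//   const client = new RealtimeClient(buildRealtimeClientOptions(credential));
```

Keeping the URL in one constant means the rest of the app does not need to know whether it is talking to OpenAI or to the proxy.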

As an example, we forked the sample app from OpenAI and hooked it up to our proxy with just a couple of lines of code.

Next, generate a Braintrust temporary credential, to be used instead of the OpenAI API key. This means your OpenAI API key will not be exposed to the client.
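One way this could look on your backend is sketched below. The request body fields (`model`, `ttl_seconds`), the `key` field in the response, and both function names are assumptions made for illustration; the credential endpoint is passed in as a parameter rather than hard-coded, so consult the Braintrust documentation for the actual URL and schema.

```typescript
// Assumed request body for a temporary credential; field names are illustrative.
interface TempCredentialParams {
  model: string;       // model the credential may be used with
  ttl_seconds: number; // how long the credential stays valid
}

interface CredentialRequestInit {
  method: string;
  headers: Record<string, string>;
  body: string;
}

// Build the HTTP request. The long-lived Braintrust API key goes in the
// Authorization header, so this code should only run on the server.
function buildCredentialRequest(
  braintrustApiKey: string,
  params: TempCredentialParams
): CredentialRequestInit {
  return {
    method: "POST",
    headers: {
      Authorization: `Bearer ${braintrustApiKey}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify(params),
  };
}

// Send the request and return the temporary credential for the frontend.
// `endpoint` is supplied by the caller; the `key` response field is assumed.
async function fetchTemporaryCredential(
  endpoint: string,
  braintrustApiKey: string,
  params: TempCredentialParams
): Promise<string> {
  const response = await fetch(endpoint, buildCredentialRequest(braintrustApiKey, params));
  if (!response.ok) {
    throw new Error(`Credential request failed with status ${response.status}`);
  }
  const data = (await response.json()) as { key?: string };
  if (!data.key) {
    throw new Error("Response did not contain a credential");
  }
  return data.key;
}
```

The returned value is what the browser would pass as its API key when connecting through the proxy, so the long-lived keys stay on the server.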

You can also use our proxy with an AI provider’s API key, but you will not have access to other Braintrust features, like logging.

Logging

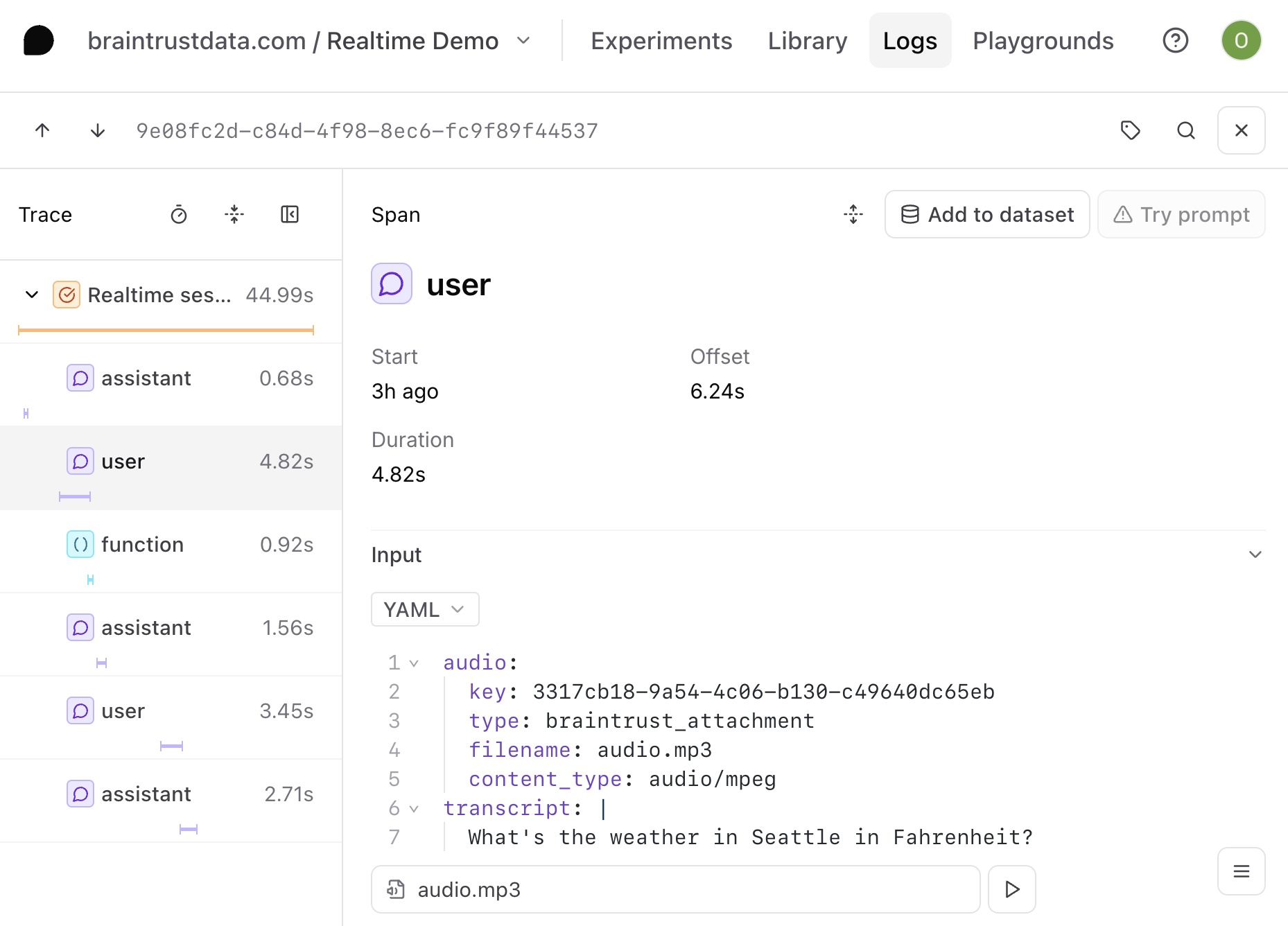

In addition to client-side authentication, you’ll also get the other benefits of building with Braintrust, like logging, built in. We support logging audio, as well as text, structured data, and images. When you connect to the Realtime API, a log will begin generating, and when your session is closed, the log will be ready to view. Each LLM and tool call is contained in its own span inside the trace. Most importantly, all multimodal content can now be uploaded and viewed as an attachment in your trace. This means you can double-click on an LLM call and view its input and output without leaving the UI, no matter what format it’s in.

What’s next

You can use this open-source repo as a starting point for any projects using the Realtime API. Because your app will automatically generate logs in Braintrust, you will have data in exactly the right format to run evaluations.

Try building with the OpenAI Realtime API today and let us know what you create!